Could AI Replace Design Researchers?

On the top floor of London’s Design Museum, as dozens of visitors walk by and rain hits the windows, a woman speaks with an AI bot called Spirit. It asks her how many partners she would want if she could live until 150. She giggles and answers: “Just one, and that is because my husband is next to me.” According to Spirit’s analytics, her answer is 33% sad and 12% joyful.

Spirit is a simulated AI, a provocation created by IDEO’s London Studio in response to a commission from the Design Museum. The museum invited designers and their teams, including Yves Behar of fuseproject and furniture designer Konstantin Grcic, to contribute to a project on the future of aging, a sequel to an exhibition on the same topic that it had hosted 30 years earlier. It asked IDEO to explore what community might look like in the year 2047.

For our project, we aimed to start a dialogue about the role emerging technologies might play in the way people connect as a community. In the future, we may no longer need to drive our children to school, talk to anyone when we shop for groceries, or visit a human doctor. Technology often makes us more independent and less reliant on human contact and support. To counter the social isolation that may arise, we developed the concept of a free and open-source social AI, a system built to foster strong and healthy communities by strengthening social bonds and keeping people active and mentally stimulated.

Creating the Exhibit

We designed the interactive part of the exhibition for three reasons: We wanted to give visitors a glimpse of what it would be like to interact with an artificial entity; we wanted to see how people would feel about the interaction; and, obviously, we like playful experimentation and prototyping.

Current AI technology is still in its infancy when it comes to holding a real dialogue with a human being. But just as autonomous cars learn with every mile they drive, these systems will improve the more they interact with people. Today’s popular AI products (Siri, Alexa, etc.) listen to user commands and respond. We found it interesting to reverse that relationship by having Spirit actively ask visitors questions, some of them rather intimate: What makes you happiest? What would you be scared of forgetting? Would you trust an artificial intelligence to choose your friends? If you lived to 120, how many partners would you want to have?

The exhibit had two states: an idle state and an interactive state. In the idle state, the display encouraged visitors to talk to Spirit, showed selected quotes from previous visitors, and visualized the data gathered so far through a generative visual design.
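The two states can be thought of as a small state machine. The sketch below is a hypothetical illustration in Python, not the installation's actual code (which, as described later, ran as an iPhone app and a vvvv program); the `Exhibit` class and the event names `visitor_entered`, `visitor_left`, and `script_finished` are invented for the example.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()         # show quotes and the pebble visualization, invite visitors in
    INTERACTIVE = auto()  # hold a scripted conversation inside the audio booth


class Exhibit:
    """Hypothetical two-state controller for an installation like Spirit."""

    def __init__(self) -> None:
        self.state = State.IDLE

    def handle(self, event: str) -> State:
        # A visitor entering the booth starts a conversation.
        if self.state is State.IDLE and event == "visitor_entered":
            self.state = State.INTERACTIVE
        # Leaving the booth or finishing the script returns to the idle display.
        elif self.state is State.INTERACTIVE and event in ("visitor_left", "script_finished"):
            self.state = State.IDLE
        # Any other event is ignored in the current state.
        return self.state


exhibit = Exhibit()
for event in ["visitor_left", "visitor_entered", "visitor_entered", "script_finished"]:
    print(event, "->", exhibit.handle(event).name)
```

Ignoring unexpected events, rather than raising an error, is one way to keep a system that must run eight hours a day from stopping on a spurious signal.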

The interactive dialogue state took place inside an audio booth. The system greeted visitors with an audiovisual message and a stylized speech bubble that mediated the conversation. It then asked a series of questions, moving into deeper conversation over time. For example, Spirit might ask, "What makes you happiest?" and answers ranged from "rich relationships" to "when it stops raining," "playing football," or "creating new things and solving problems." Each answer created a new pebble in the visualization, whose color, size, and shape were determined by an analysis of the response's length and emotion. When the visitor finished answering, the pebble merged into a sea of pebbles representing all the interactions collected so far.
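The article does not say exactly how length and emotion were mapped to a pebble. As a purely hypothetical illustration, the sketch below assumes emotion scores between 0 and 1, colors the pebble by the dominant emotion, scales its size with the answer's word count, and makes its outline rounder when the dominant emotion scores higher. The palette, size range, word cap, and `Pebble` fields are all invented for the example.

```python
from dataclasses import dataclass

# Hypothetical palette: one color per emotion category.
PALETTE = {
    "joy": "#f5c518",
    "sadness": "#3a6ea5",
    "anger": "#c0392b",
    "fear": "#6c3483",
    "disgust": "#1e8449",
}


@dataclass
class Pebble:
    color: str
    size: float       # e.g. radius in pixels
    roundness: float  # 1.0 = perfect circle, lower = more irregular outline


def make_pebble(answer: str, emotions: dict[str, float],
                min_size: float = 10.0, max_size: float = 60.0,
                max_words: int = 40) -> Pebble:
    """Map an answer's length and emotion scores (0..1) to pebble attributes."""
    # Color: the emotion with the highest score (grey if it has no palette entry).
    dominant = max(emotions, key=emotions.get)
    color = PALETTE.get(dominant, "#999999")
    # Size: grows linearly with word count, capped at max_words.
    words = min(len(answer.split()), max_words)
    size = min_size + (max_size - min_size) * words / max_words
    # Shape: a higher-scoring dominant emotion gives a rounder pebble.
    roundness = 0.5 + 0.5 * emotions[dominant]
    return Pebble(color, size, roundness)


p = make_pebble("playing football with my grandchildren",
                {"joy": 0.8, "sadness": 0.1, "anger": 0.0, "fear": 0.05, "disgust": 0.0})
print(p)
```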

Visual interface design and data visualization by Studio Waltz Binaire

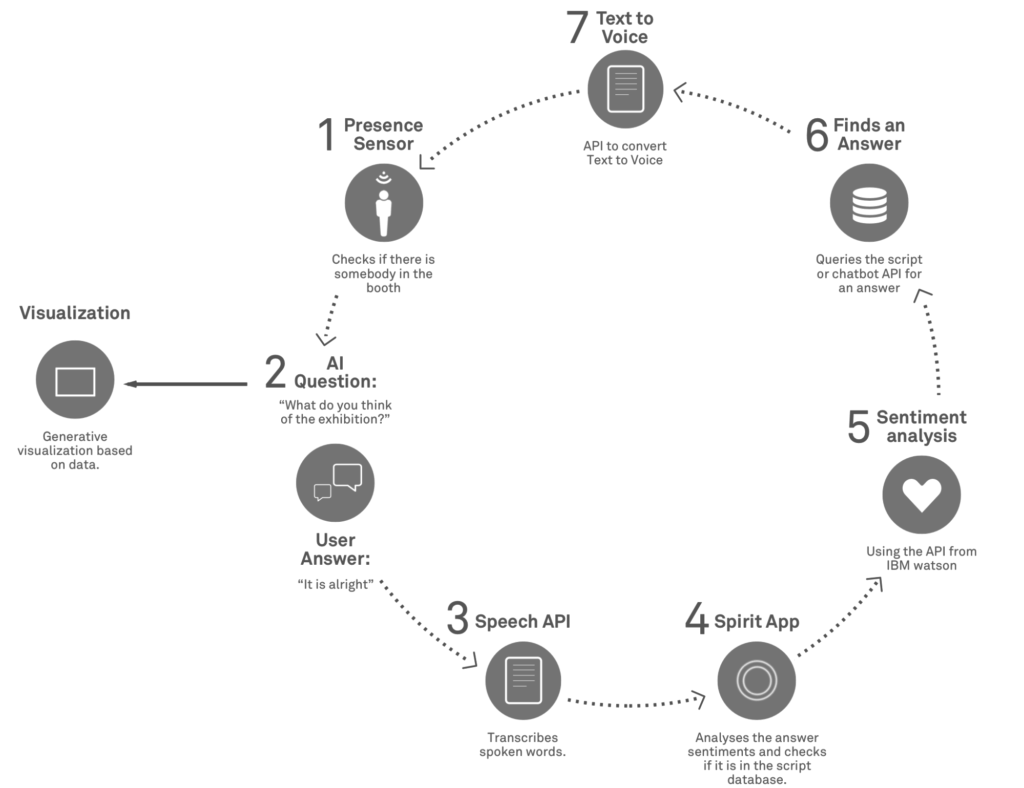

Building Spirit

The technical challenge of the exhibit was to keep the system running for five weeks, seven days a week, eight hours a day. Many components were talking to each other, and we needed to be able to track feedback from thousands of visitors.

Spirit followed each conversation with a bespoke script, and when an answer veered too far from what the script anticipated, it sent the answer to a chatbot API to find an appropriate reply. Unfortunately, speech-to-text proved unreliable in the museum environment, so we relied mainly on the scripted questions.
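A minimal sketch of "follow the script, fall back to a chatbot" logic, assuming a simple string-similarity score decides whether an answer is close enough to an anticipated one. Python's standard `difflib.SequenceMatcher` stands in for whatever matching Spirit actually used; the script contents, the 0.6 threshold, and the `ask_chatbot` callback are hypothetical, and no real chatbot API is called.

```python
from difflib import SequenceMatcher
from typing import Callable

# Hypothetical script: anticipated answers mapped to Spirit's next line.
SCRIPT = {
    "yes": "Tell me more. Why would you trust it?",
    "no": "What would it take for you to trust it?",
    "i don't know": "That's fair. Let's try another question.",
}


def similarity(a: str, b: str) -> float:
    """Ratio in [0, 1]; 1.0 means the normalized strings are identical."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def reply(answer: str, ask_chatbot: Callable[[str], str],
          threshold: float = 0.6) -> str:
    # Find the anticipated answer that best matches what the visitor said.
    best = max(SCRIPT, key=lambda expected: similarity(answer, expected))
    if similarity(answer, best) >= threshold:
        return SCRIPT[best]
    # The answer veered too far from the script: hand it to the chatbot.
    return ask_chatbot(answer)


def fake_chatbot(text: str) -> str:
    # Placeholder for a call to a real chatbot service.
    return f"(chatbot) Interesting, you said: {text}"


print(reply("Yes", fake_chatbot))
print(reply("only if it knows me well", fake_chatbot))
```

Passing the chatbot in as a function keeps the scripted path testable on its own and makes it easy to swap in a different service.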

The technical setup consisted of two components: the audio booth and the large display facing it. To let Spirit know when somebody was present in the booth, we installed an Arduino with an ultrasonic sensor that communicated with an iPhone 6 via Bluetooth. The iPhone was connected to a directional microphone and a speaker.

Here is where the magic happened. On the phone, we ran a custom app. The app converted speech to text using Apple’s Speech framework, analyzed the emotions in the answer using IBM Watson, and checked whether the answer was in the pre-written script. If it wasn’t, the app used another API to get a reply from the chatbot. The answer and its emotion data were stored and sent to a custom program written in vvvv, which communicated with the iPhone app via OSC.
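OSC (Open Sound Control) messages have a simple binary layout defined by the OSC 1.0 specification: a null-terminated address string padded to a multiple of four bytes, a type-tag string starting with a comma, then the arguments in big-endian form. The sketch below encodes such a message by hand with only the standard library. The address `/spirit/answer` and the choice of arguments (answer text plus two emotion scores) are hypothetical; the article does not document the actual messages exchanged with the vvvv program.

```python
import struct


def osc_string(s: str) -> bytes:
    """OSC-string: bytes plus 1-4 null bytes so the length is a multiple of 4."""
    data = s.encode("utf-8") + b"\x00"  # the spec says ASCII; UTF-8 is a common extension
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args: object) -> bytes:
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') and string ('s') args."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit int
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


msg = osc_message("/spirit/answer", "rich relationships", 0.71, 0.05)
print(len(msg), msg[:16])
```

OSC is commonly carried over UDP, so sending such a message would typically be a single `sendto` on a `socket.SOCK_DGRAM` socket, addressed to the host and port the receiving program listens on.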

Ambient noise was our biggest worry, since it could prevent the system from understanding visitors’ answers. The microphone was also in a fixed position, which made it hard to capture visitors of different heights and voice volumes. This did prove to be a problem: in several interactions, the voice was unintelligible.

The Data

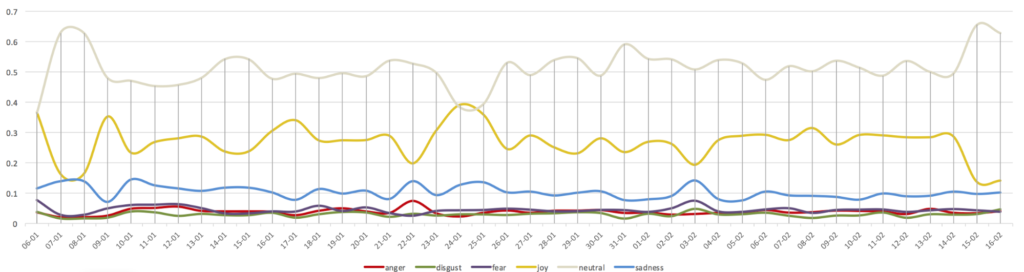

One perk of the interactive system was real-time access to the data, so we could follow how the installation and exhibition were performing and what the audience was saying. After the exhibition ended, we had more time to analyze the data with Python and pandas, using Excel to plot the results.
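A minimal pandas sketch of the kind of per-day summary this analysis might involve. The column names (`session_id`, `timestamp`, `joy`, `sadness`) and the handful of rows are invented for illustration; they are not Spirit's data or schema.

```python
import pandas as pd

# Illustrative rows only: one row per answer, with emotion scores in [0, 1].
answers = pd.DataFrame({
    "session_id": [1, 1, 2, 3, 3, 4],
    "timestamp": pd.to_datetime([
        "2000-01-01 10:05", "2000-01-01 10:06", "2000-01-01 15:30",
        "2000-01-02 11:00", "2000-01-02 11:02", "2000-01-02 16:45",
    ]),
    "joy": [0.60, 0.20, 0.40, 0.90, 0.70, 0.50],
    "sadness": [0.10, 0.50, 0.30, 0.00, 0.10, 0.20],
})

# Group by calendar day: count distinct sessions and average the emotion scores.
daily = answers.groupby(answers["timestamp"].dt.date).agg(
    sessions=("session_id", "nunique"),
    mean_joy=("joy", "mean"),
    mean_sadness=("sadness", "mean"),
)
print(daily)
```

A table like `daily` could then be exported with `daily.to_csv(...)` and plotted in a spreadsheet.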

We had a total of 8996 individual sessions with Spirit during the exhibition, about 10 percent of the visitors to the Design Museum.
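As a rough back-of-the-envelope check, assuming the booth ran for the full schedule described earlier (five weeks, seven days a week, eight hours a day), the session count works out to about one conversation every two minutes, and the 10 percent figure implies roughly 90,000 museum visitors over the same period.

```latex
\begin{aligned}
\text{operating hours} &= 5 \times 7 \times 8 = 280\ \text{h} \\
\text{sessions per hour} &= \frac{8996}{280} \approx 32.1 \\
\text{minutes per session} &\approx \frac{60}{32.1} \approx 1.9 \\
\text{implied visitors} &\approx \frac{8996}{0.10} \approx 90{,}000
\end{aligned}
```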

The emotion recognition system we used, IBM Watson, is not yet fully reliable (for example, “yes” was scored as a 100% joyful answer). But given the amount of data we gathered, it still offered some hints about visitor moods, and variations in mood could be correlated with external events. For example, we saw a peak in feelings of joy on the 24th, when London hit its highest temperature after a cold January.

When we looked at the answers, we found that they seemed more spontaneous and playful than answers to written questionnaires. They were also more honest, suggesting that for some people, sharing intimate thoughts with an AI might be less intimidating than talking to a human interviewer. Others, though, were critical and disconcerted. It became clear that we need to start a dialogue about how we want to interact with AI in our daily lives.

Though the experiment obviously didn’t culminate in a fully formed product to help the elderly, the interaction model helped us prototype the kind of AI we would need to build community and increase social interaction, and allowed us to test people’s reactions to emotional and intimate questions from an AI. The experiment also related directly to our design research work. We frequently send design researchers into the field to talk to people, learn about their needs and habits, and understand how we can design solutions that fit their lives. Our work with Spirit raises the question: could a design researcher be replaced by an artificial researcher at some point? For now, we don’t have many answers, but this experiment pushed our understanding forward, and we’re excited about the AI-driven insights to come.

